Auditing Role Changes

I was recently asked to find a way to audit Azure Active Directory (AAD, AzAD) Role changes, such as changes to the Global Administrator role, using a SIEM (security information and event management) system. The Azure portal only provides one month of Role Management history. Being able to query a SIEM such as Splunk lets a security professional go back further during an investigation or audit.

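There are two immediate ways to audit role changes that do not require coding skills: the Azure Active Directory Portal and the Microsoft 365 Compliance Center. Both are covered below, followed by the Splunk approach.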

“Eligible” vs “Permanent” Roles: “If a user has been made eligible for a role, that means they can activate the role when they need to perform privileged tasks. There’s no difference in the access given to someone with a permanent versus an eligible role assignment. The only difference is that some people don’t need that access all the time.” [Source: Privileged Identity Management (PIM)]

Note on Audit Log

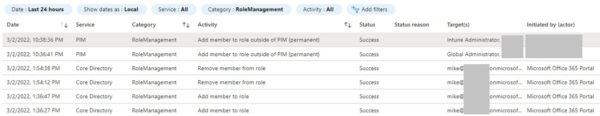

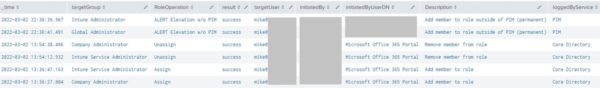

While writing this article, I found that when a Role membership was modified, the Core Directory service logged that action with the correct timestamp. The PIM service may later also trigger an alert if the operation was done outside of its domain, generally within about 2 minutes. However, I recently came across a severely DELAYED alert from the PIM service that could cause unnecessary panic for auditors and the security team. The below screenshot is an example of such an alert occurring nearly 9 HOURS after the role changes were made:

There also was this odd alert that occurred 30 hours later:

Why would a delayed alert be a problem?

It can lead to a false alarm and an unnecessary escalation. Let’s look at the below screenshot as an example:

The Core Directory service correctly notified an auditor that the “Global Administrator” role had its membership modified at 1:36pm (Assign – Role granted) and 1:54pm (Unassign – Role removed), but PIM followed up with an alert of its own 9 HOURS later at 10:36pm (Alert). The auditor may then panic late that evening that a “Global Administrator” has been assigned and escalate the situation. The security team would look at the “Global Administrator” role and find that user “mike” does not possess that access (it was removed at 1:54pm). Also notice there was only one (successful) alert entry from PIM at 10:36pm for a member having been added outside of its service. There was no additional one about the role having been removed. Perhaps PIM only cares if access was granted?

I have not yet found the reason why PIM would alert so late after an action was taken. Maybe Azure AD was overwhelmed with log synchronization at that time? It seems like a misstep on Microsoft’s part to not prioritize IAM-related alerts. If you know why, please share in the Comments!
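The triage reasoning in the example above can be sketched in a few lines of Python. This is only an illustration: the record layout (timestamp, service, operation) and the calendar date are made up for the sketch and are not the real Azure AD log schema. Only the three times come from the example. It replays the events in order and reports how late the PIM alert was and whether the role was still held when the alert fired.

```python
from datetime import datetime, timedelta

# Hypothetical, simplified records: (timestamp, service, operation).
# The times mirror the example in the text; the date is arbitrary.
events = [
    (datetime(2021, 1, 1, 13, 36), "Core Directory", "Assign"),
    (datetime(2021, 1, 1, 13, 54), "Core Directory", "Unassign"),
    (datetime(2021, 1, 1, 22, 36), "PIM", "Alert"),
]


def triage_pim_alert(records):
    """Return (lag between assignment and PIM alert, role still held at alert time)."""
    assigned_at = None
    still_assigned = False
    for ts, service, op in sorted(records):
        if service == "Core Directory" and op == "Assign":
            assigned_at, still_assigned = ts, True
        elif service == "Core Directory" and op == "Unassign":
            still_assigned = False
        elif service == "PIM" and op == "Alert":
            lag = ts - assigned_at if assigned_at is not None else None
            return lag, still_assigned
    # No PIM alert was found in the records.
    return None, still_assigned


lag, still_assigned = triage_pim_alert(events)
print(f"Alert lag: {lag}, role still assigned: {still_assigned}")
```

A large lag combined with "role still assigned: False" suggests the alert is stale and does not need a late-night escalation, though the Core Directory entries should still be reviewed.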

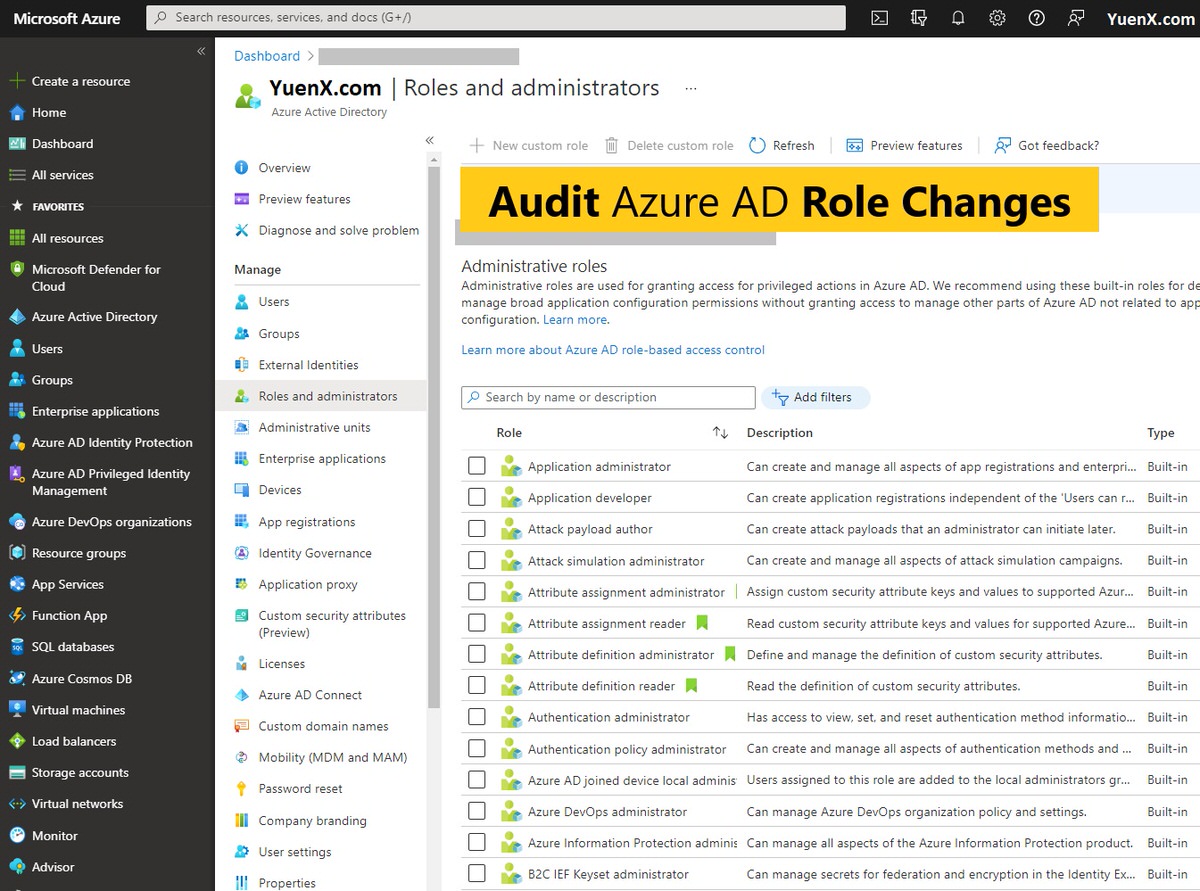

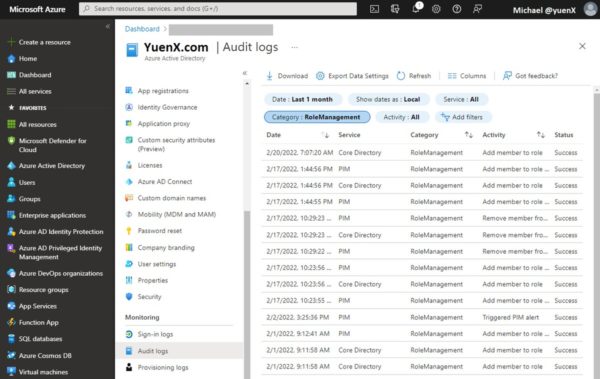

Azure Active Directory Portal

To view Role changes in the Azure AD Portal, go to Azure Active Directory > Monitoring > Audit Logs. From there, change the Category to “Role Management”. Below is a sample output. Azure will not allow you to set the Date range further back than the last month.

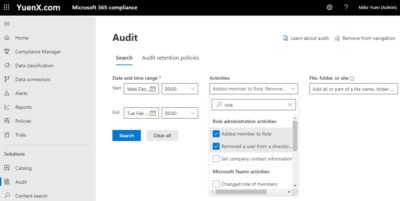

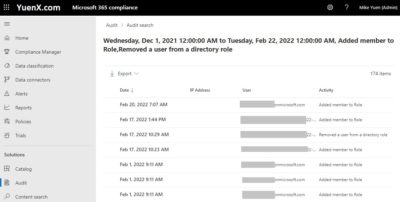

Microsoft 365 Compliance Center

To view Role changes in the M365/O365 Compliance Center, go to Microsoft 365 Compliance > Solutions > Audit. Under Activities, type “role” and select “Added member to Role” and “Removed a user from a directory role”. Unlike the Azure AD Portal, the date range can go back further than the last month. The Audit search will take some time to complete, so feel free to go grab yourself a cup of coffee.

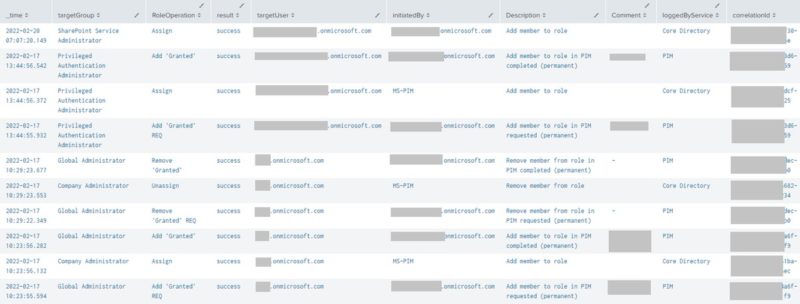

Splunk (SIEM)

Here is the query I formulated to audit role changes. It was interesting to see what happens on the Azure AD backend when a user is added to or removed from a given Role, and how the request is processed by PIM or the Core Directory service. Furthermore, the event logs were not presented in a consistent way, requiring the query to account for some variations I had come across.

index=YuenX_Azure sourcetype="azure:aad:audit"

| spath category | search category=RoleManagement

| spath "initiatedBy.user.userPrincipalName" output="initiatedByUserUPN" | spath "initiatedBy.user.displayName" output="initiatedByUserDN" | spath "initiatedBy.app.displayName" output="initiatedByApp"

| spath "targetResources{}.displayName" output="targetGroup" | spath "targetResources{}.displayName" output="targetUserDN" | spath "targetResources{}.userPrincipalName" output="targetUserUPN"

| spath "targetResources{}.modifiedProperties{}.oldValue" output="targetGroup_OpType_Unassign" | eval targetGroup_OpType_Unassign=mvindex(targetGroup_OpType_Unassign, 1)

| rename activityDisplayName as Description, resultReason as Comment

| eval targetUserUPN_CD=case(loggedByService=="Core Directory", mvindex(targetUserUPN, 0))

| eval targetUserUPN_PIM=case(loggedByService=="PIM", mvindex(targetUserUPN, 2)) | eval targetUserDN_PIM=case(loggedByService=="PIM", mvindex(targetUserDN, 2))

| replace "" with "-" in initiatedByUserUPN | replace "null" with "-" in Comment, targetGroup_OpType_Unassign, targetUserUPN_CD, targetUserUPN_PIM, targetUserDN_PIM

| eval RoleOperation=case(operationType=="ActivateAlert", "ALERT", operationType=="AdminRemovePermanentEligibleRole", "Remove Perm 'Eligible'", operationType=="AdminRemovePermanentGrantedRole", "Remove Perm 'Granted'", operationType=="AssignPermanentEligibleRole", "Add Perm 'Eligible'", operationType=="AssignPermanentGrantedRole", "Add Perm 'Granted'", operationType=="CreateRequestPermanentEligibleRole", "Add Perm 'Eligible' REQ", operationType=="CreateRequestPermanentEligibleRoleRemoval", "Remove Perm 'Eligible' REQ", operationType=="CreateRequestPermanentGrantedRole", "Add Perm 'Granted' REQ", operationType=="CreateRequestPermanentGrantedRoleRemoval", "Remove Perm 'Granted' REQ", operationType=="ResolveAlert", "Resolve ALERT", operationType=="RoleElevatedOutsidePimAlert", "ALERT Elevation w/o PIM", 1=1, operationType)

| eval initiatedBy=case(!isnull(initiatedByApp), initiatedByApp, !isnull(initiatedByUserUPN) AND !initiatedByUserUPN=="-", initiatedByUserUPN, 1=1, initiatedByUserDN)

| eval targetGroup=case(loggedByService=="PIM", mvindex(targetGroup, 0), loggedByService=="Core Directory" AND (targetGroup_OpType_Unassign=="-" OR isnull(targetGroup_OpType_Unassign)), mvindex('targetResources{}.modifiedProperties{}.newValue', 1), loggedByService=="Core Directory" AND !targetGroup_OpType_Unassign=="-" AND !isnull(targetGroup_OpType_Unassign), targetGroup_OpType_Unassign, 1=1, targetGroup)

| eval targetGroup=replace(targetGroup,"\"","")

| eval targetUser=case(!isnull(targetUserUPN_CD), targetUserUPN_CD, !isnull(targetUserDN_PIM) AND !targetUserDN_PIM=="-" AND (isnull(targetUserUPN_PIM) OR targetUserUPN_PIM=="-"), targetUserDN_PIM, 1=1, targetUserUPN_PIM)

| table _time, targetGroup, RoleOperation, result, targetUser, initiatedBy, initiatedByUserDN, Description, Comment, loggedByService, correlationId
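The `initiatedBy` fallback in the query above may be easier to follow outside of SPL. Below is a minimal Python sketch of the same precedence, assuming the three extracted values are passed in as plain strings (or `None` when the field is absent). The function name and the sample values are hypothetical and used only for illustration.

```python
def resolve_initiated_by(app_name, user_upn, user_display_name):
    """Mirror the query's initiatedBy case(): app first, then UPN, then display name."""
    # An application (for example, a service acting on its own) takes precedence.
    if app_name is not None:
        return app_name
    # The query replaces an empty UPN with "-", so treat both as "no UPN".
    if user_upn not in (None, "", "-"):
        return user_upn
    # Fall back to the display name when no usable UPN exists.
    return user_display_name


# Hypothetical sample values, not taken from real logs.
print(resolve_initiated_by("Example App", None, None))
print(resolve_initiated_by(None, "admin@example.com", "Admin User"))
print(resolve_initiated_by(None, "-", "Admin User"))
```

The `targetUser` and `targetGroup` expressions in the query follow the same pattern: try the most specific value for the logging service first, then fall back to whatever is left.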

Use the below query to locate any additional (or all) Role Management Operation Types that had surfaced in your Splunk environment for a time period you specify:

index=YuenX_Azure sourcetype="azure:aad:audit" | spath category | search category=RoleManagement | fields operationType,activityDisplayName | stats values(activityDisplayName) as activityDisplayName by operationType

Other Categories and SourceTypes

To find other available Categories to audit on, use: index=YuenX_Azure sourcetype="azure:aad:audit" | stats count by category

Some interesting ones:

- sourcetype="azure:aad:audit" category=UserManagement

- sourcetype="azure:aad:audit" category=GroupManagement

Scheduled Alerts

You may want to set up a Splunk Alert to notify the Security and Azure administration teams of any changes within a specific time period so that any unauthorized activity can be investigated.

- A cron schedule of every 45 minutes with a search over the last 46 minutes worked best for my environment

- A shorter period (like 11 minutes) did not trigger an alert in my tests. One trial that checked every 30 minutes also failed to catch some events

- I suspect the cause was the delay between when an AAD log is produced, when it is ingested into and indexed by Splunk, and when the scheduled alert search runs

- Example: An event occurs at 9:00am. Azure AD produces the log at 9:05am, Splunk ingests it at 9:07am and indexes it at 9:10am, and the scheduled search runs at 9:12am. At execution time (9:12am), the search looks back over the last 11 minutes, which covers any event from 9:01am on. Because the event occurred at 9:00am, it falls outside the search window and the alert is not triggered. In this example, setting the search window to 16 minutes instead would catch any event that occurred since 8:56am

- Azure AD can take as long as 20-30 minutes to produce a log entry. You may have to extend your scheduled search window beyond the last 30 minutes. Be sure to test properly in your environment, and be mindful of the time it takes for Splunk to finish indexing the logs

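The window arithmetic from the example above can be checked with a short Python sketch. It assumes, as the example does, that the scheduled search filters on the time the event occurred and can only see events that have already been indexed. The function name and the calendar date are made up for illustration.

```python
from datetime import datetime, timedelta


def is_caught(event_time, indexed_time, search_time, lookback_minutes):
    """True if the event is indexed by search time and falls inside the lookback window."""
    window_start = search_time - timedelta(minutes=lookback_minutes)
    return indexed_time <= search_time and window_start <= event_time <= search_time


# Times from the example: event at 9:00, indexed at 9:10, search runs at 9:12.
event = datetime(2021, 1, 1, 9, 0)
indexed = datetime(2021, 1, 1, 9, 10)
search_run = datetime(2021, 1, 1, 9, 12)

print(is_caught(event, indexed, search_run, 11))  # window starts 9:01, event missed
print(is_caught(event, indexed, search_run, 16))  # window starts 8:56, event caught
```

Plugging in your own worst-case delay between the event and indexing gives a rough lower bound for the lookback window. It is not a substitute for testing in your environment.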
